Learning to Secure Cooperative Multi-Agent Learning Systems: Advanced Attacks and Robust Defenses

Personnel

- Principal Investigator: Zizhan Zheng

- Graduate Students: Henger Li, Xiaolin Sun

Goals

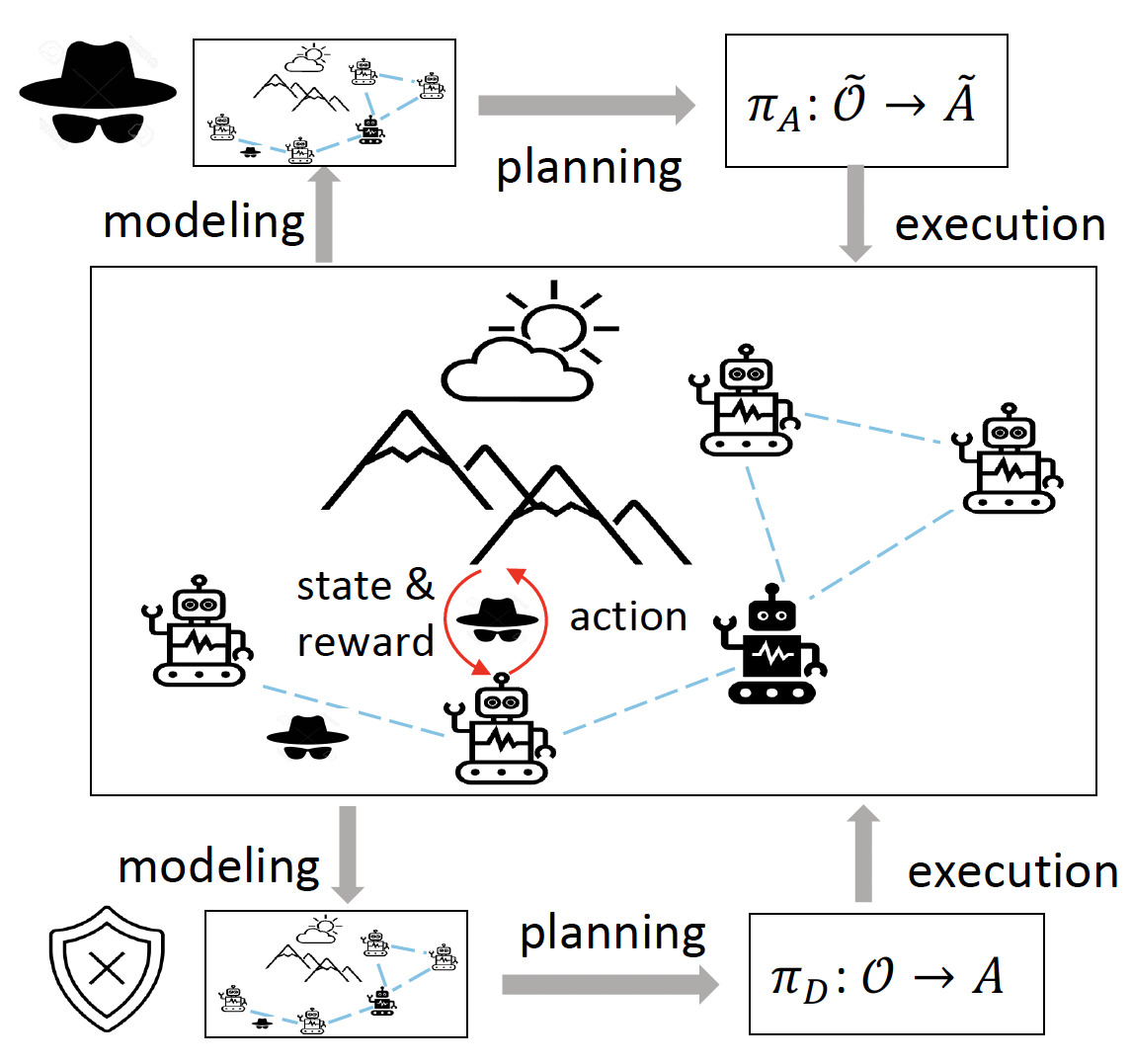

Cooperative multi-agent learning (MAL), where multiple intelligent agents learn to coordinate with each other and with humans, is emerging as a promising paradigm for solving some of the most challenging problems in security- and safety-critical domains, including transportation, power systems, robotics, and healthcare. The decentralized nature of MAL systems and agents' exploration behavior, however, introduce new vulnerabilities unseen in standalone machine learning systems and traditional distributed systems. This project aims to develop a data-driven approach to MAL security that can provide an adequate level of protection even in the presence of persistent, coordinated, and stealthy insiders or external adversaries. The main novelty of the project is to go beyond heuristics-based attack and defense schemes by incorporating opponent modeling and adaptation into security-related decision-making in a principled way. The project contributes to the emerging fields of science of security and trustworthy artificial intelligence via a cross-disciplinary approach that integrates cybersecurity, multi-agent systems, machine learning, and cognitive science.

Tasks

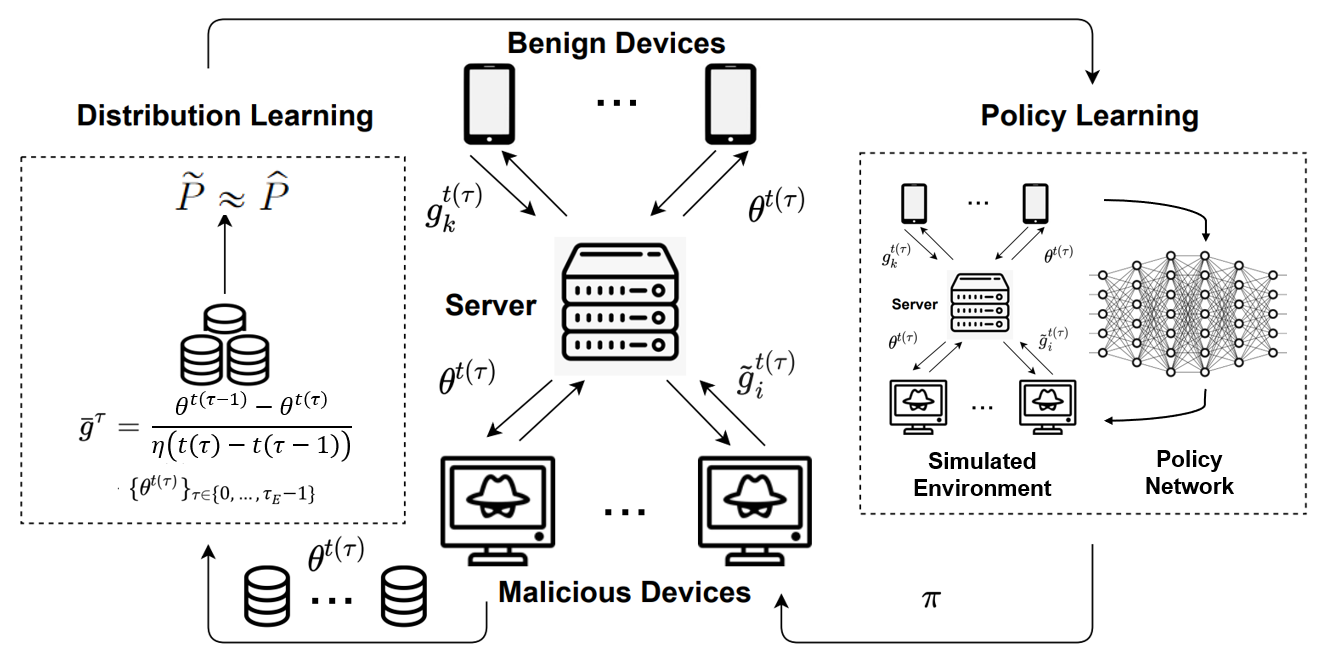

First, we develop learning-based targeted and untargeted attacks against federated and decentralized machine learning. These attacks first infer a "world model" from publicly available data and then apply reinforcement learning, with properly defined state and action spaces and a reward function, to identify an adaptive attack policy that can fully exploit the system vulnerabilities.
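The two-stage recipe described above (build a simulator from public data, then learn an attack policy inside it) can be illustrated with a deliberately small sketch. It is not the project's implementation: the scalar least-squares model, the FedAvg-style mean aggregation, the three-action space, the two-valued state, the excess-loss reward, and all names and constants (`WorldModel`, `train_attack_policy`, `final_excess_loss`, `N_BENIGN`, `LR`, `BOUND`) are assumptions chosen only to keep the example self-contained.

```python
import random

ACTIONS = (-1.0, 0.0, 1.0)          # assumed action space: direction of the malicious update
N_BENIGN, LR, BOUND = 4, 0.5, 1.0   # hypothetical client count, server step size, update bound


class WorldModel:
    """Attacker-side simulator of mean aggregation on a scalar least-squares model."""

    def __init__(self, public_samples):
        # For a least-squares scalar model, the benign optimum is the data mean,
        # so the attacker estimates it from the public samples.
        self.center = sum(public_samples) / len(public_samples)

    def state(self, w):
        # Coarse state: is the global model above (+1) or below (-1) the benign optimum?
        return 1 if w >= self.center else -1

    def step(self, w, action):
        benign = [self.center - w] * N_BENIGN        # simulated benign gradient steps
        malicious = action * BOUND                   # bounded malicious update
        w_next = w + LR * (sum(benign) + malicious) / (N_BENIGN + 1)
        reward = 0.5 * (w_next - self.center) ** 2   # excess global loss after the round
        return w_next, reward


def train_attack_policy(model, episodes=500, rounds=30, alpha=0.1,
                        gamma=0.9, epsilon=0.3, seed=0):
    """Tabular Q-learning inside the world model; returns a greedy state-to-action map."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in (-1, 1) for a in ACTIONS}
    for _ in range(episodes):
        w = model.center + rng.uniform(-0.2, 0.2)    # random initial global model
        for _ in range(rounds):
            s = model.state(w)
            if rng.random() < epsilon:               # explore
                a = rng.choice(ACTIONS)
            else:                                    # exploit the current estimate
                a = max(ACTIONS, key=lambda x: q[(s, x)])
            w, r = model.step(w, a)
            best_next = max(q[(model.state(w), x)] for x in ACTIONS)
            q[(s, a)] += alpha * (r + gamma * best_next - q[(s, a)])
    return {s: max(ACTIONS, key=lambda x: q[(s, x)]) for s in (-1, 1)}


def final_excess_loss(model, policy, rounds=30):
    """Roll out a fixed policy from the benign optimum; return the last round's excess loss."""
    w, loss = model.center, 0.0
    for _ in range(rounds):
        w, loss = model.step(w, policy[model.state(w)])
    return loss


if __name__ == "__main__":
    model = WorldModel(public_samples=[0.9, 1.1, 1.0, 1.2, 0.8])
    policy = train_attack_policy(model)
    print(policy, final_excess_loss(model, policy))
```

Comparing `final_excess_loss` for the learned policy against a policy that always submits a zero update gives a rough sense of how much damage the simulated attacker can cause. In a realistic setting the world model, state representation, and learner would all be far richer than this tabular toy.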

Second, we investigate Stackelberg Markov games with asymmetric observations as a principled framework for achieving proactive defenses for cooperative learning systems against unknown/uncertain attacks. Our defenses integrate adversarial training and meta-learning by utilizing the automated attack framework developed in the first task as a simulator of (strong) adversaries.
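As a rough illustration of the leader-follower structure behind this task, a generic Bayesian Stackelberg objective can be written as follows. The notation is assumed for this sketch and does not come from the project description: $\theta$ parameterizes the defender's policy, $\xi$ is an attack type drawn from an assumed distribution $Q$, $\phi$ parameterizes the attacker's policy, and $J_D$ and $J_A^{\xi}$ are the defender's and attacker's expected returns.

```latex
\max_{\theta} \; \mathbb{E}_{\xi \sim Q}
  \left[ J_D\big(\theta, \phi^{*}_{\xi}(\theta)\big) \right]
\quad \text{s.t.} \quad
\phi^{*}_{\xi}(\theta) \in \arg\max_{\phi} \; J_A^{\xi}(\theta, \phi)
```

Under this reading, the inner problem is where a learned attacker (such as the one from the first task) would serve as an approximate best response during adversarial training, and the outer expectation over attack types is one place where meta-learning could enter. The project's actual formulation, including the asymmetric observations, may differ from this simplified form.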

Third, we study security in multi-agent reinforcement learning systems by addressing a set of new challenges, including complicated interactions among agents, non-stationarity, and partial observability. The goal is to understand how malicious attacks and deceptions can prevent benign agents from reaching a socially preferred outcome and how accounting for a higher order of beliefs can help an agent (benign or malicious) in both fully cooperative and mixed-motive settings.

Publications

- Belief-Enriched Pessimistic Q-Learning against Adversarial State Perturbations [code]

Xiaolin Sun and Zizhan Zheng

International Conference on Learning Representations (ICLR), May 2024.

- Robust Q-Learning against State Perturbations: a Belief-Enriched Pessimistic Approach

Xiaolin Sun and Zizhan Zheng

NeurIPS Workshop on Multi-Agent Security: Security as Key to AI Safety (MASEC), Dec. 2023.

- Pandering in a (Flexible) Representative Democracy

Xiaolin Sun, Jacob Masur, Ben Abramowitz, Nicholas Mattei, and Zizhan Zheng

Conference on Uncertainty in Artificial Intelligence (UAI), July 2023.

- A First Order Meta Stackelberg Method for Robust Federated Learning

Yunian Pan, Tao Li, Henger Li, Tianyi Xu, Quanyan Zhu, and Zizhan Zheng

ICML Workshop on New Frontiers in Adversarial Machine Learning (AdvML-Frontiers), July 2023.

- Learning to Backdoor Federated Learning [code]

Henger Li, Chen Wu, Sencun Zhu, and Zizhan Zheng

ICLR 2023 Workshop on Backdoor Attacks and Defenses in Machine Learning (BANDS), May 2023.

- Does Delegating Votes Protect Against Pandering Candidates? (Extended Abstract)

Xiaolin Sun, Jacob Masur, Ben Abramowitz, Nicholas Mattei, and Zizhan Zheng

International Conference on Autonomous Agents and Multi-Agent Systems (AAMAS), May 2023.

- Learning to Attack Federated Learning: A Model-based Reinforcement Learning Attack Framework [code]

Henger Li*, Xiaolin Sun*, and Zizhan Zheng (*Co-primary authors)

Conference on Neural Information Processing Systems (NeurIPS), Dec. 2022.

- Robust Moving Target Defense against Unknown Attacks: A Meta-Reinforcement Learning Approach [code]

Henger Li and Zizhan Zheng

Conference on Decision and Game Theory for Security (GameSec), Oct. 2022.

Support

The project is funded by the National Science Foundation (NSF) CAREER award CNS-2146548.

Disclaimer: Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.